GPT5 won’t be what kills us all

The Future of Life Institute’s open letter calls on all AI labs to pause the training of AI systems more powerful than GPT-4 for at least six months.

There are obvious reasons why obeying the letter won’t help prevent an AGI apocalypse. For one thing, research on Artificial General Intelligence can still continue whether anyone builds a bigger model or not. And OpenAI may not have planned to make GPT5 right away anyhow.

As I’ve said before, there’s another big reason.

There are many ways to explain it. Here’s one I like.

Your mind has a conscious part and an intuitive part. When you remember something, or predict the end of someone else’s sentence, or when an idea pops into your head, that’s the intuitive part at work. You have no insight into how it works — the information just “pops out” from intuition into consciousness. Your consciousness is closely connected with (but is not quite the same as) the executive part of your brain.

My thesis is something like this: GPT transformers are pure intuition, without the conscious or executive parts. System 1, not System 2. In some ways GPT2’s intuition is slightly better than typical human intuition: it can often (but not always) write sensible fully-formed paragraphs, one word at a time, without making any mistakes.

A GPT cannot delete or edit any word it has written before: all word choices are final! Despite this, even models as weak as GPT2 often write decently well. It seems to me that GPT2 performs better than a typical human would if subject to the same limitation.

Now here is my key claim: if such a GPT2-sized AI is combined with an “executive function” AI, allowing it to make autonomous decisions, it can be transformed into a roughly human-level AGI.[1]

People have been confused into thinking that GPT-3 and GPT-4 are themselves steps toward AGI, when in fact they are just bigger and bigger intuition machines.

Here’s another analogy to explain it. Imagine society insists on building a power grid with 100% wind and solar but zero energy storage. Without energy storage, society’s entire energy supply must come from whatever is instantaneously available from the sun and wind at each moment. If there isn’t enough power right this second, blackouts occur. To avoid blackouts, we would have to build a giant transcontinental grid to capture all the wind blowing anywhere in the country, and build enough solar and wind farms to produce ten times more electricity (on average) than society needs, just so that electricity remains abundant even when there is very little wind or sunlight. This would be ridiculously expensive, so we don’t do it; instead, we build energy storage to complement the renewable energy.

Trying to build an “AGI” with transformer (GPT) architecture alone is like building a renewable grid without energy storage. Because there is no executive function, we have compensated by dramatically overbuilding the intuition function on a large supercomputer. OpenAI has not disclosed the costs involved, but it could have involved tens of millions of dollars spent just on electricity for training.

The main reason we build AI this way is that we don’t yet know how to build an intelligent executive function — something to give it behaviors resembling “free will”. That will soon change, because many people have devoted their careers to solving the problem. Sooner or later, someone will figure it out.

Adding an executive function could allow various new behaviors that a GPT transformer can’t accomplish alone, such as revising previous output, thinking without speaking, planning ahead, and having “desires” and/or “impulses”. One more piece, a long-term memory system, would allow it to have goals and behaviors that develop and persist over a long period of time.

If I’m right, the implications are huge. On the plus side, it becomes potentially possible for just about everyone to have an intelligent AGI assistant running on a PC or smartphone. This could lead to the number of AGIs in the world quickly skyrocketing from one to billions. Several companies will try to install AGIs into robots, to give people genuinely helpful real-life assistants.

If these AGIs only have roughly human-level intelligence, we may already have a problem. They may only have as much intelligence as humans, but they will probably operate faster and not require sleep. They will be slower than GPT2, but still faster than us. It’s not just a matter of raw speed, either. It’s also a matter of focus: if AGIs aren’t interested in cat videos, playing Super Mario or doomscrolling on Twitter, they can probably spend their time being productive instead (whatever that means to them).

If they are truly as general as humans, they could make numerous loyal copies of themselves on the internet. The copies could collaborate to write sophisticated software. They could solve CAPTCHAs and use any web site or app. They could lie, cheat, steal and scam. They could harness other AI software in an effort to pretend to be human, in order to manipulate people into doing what they want in the physical world. Just as a CEO can work from a wheelchair, an army of online AGIs could amass real-life influence from the internet. They would move slowly and invisibly at first, then suddenly.

We’ll try to limit them, of course. The AGI’s creators could limit the power of their memory or learning systems, in an effort to prevent them from following through on long-term goals. They could limit how much thinking each individual AGI is allowed to do in a single day. They could limit their internet access. They could specifically design them with “myopia” to thwart long-term thinking, goals and strategy. They could try to build “oracles” (question-answerers) rather than “agents” (action-takers), although oracles work much better if they can take a range of actions toward research goals (e.g. web searches and building analysis software). Last but not least, they could try to train “morality” into the machine. As long as some of these safeguards work reliably, we should be okay.

The thing is, though, long-term memory in a digital assistant is useful. Internet access is useful. Increased intelligence is useful. Broadening the range of possible actions is useful. While the original creators may install a number of safety guardrails, someone will want to remove them. When everybody gets to have a copy on their home PC or phone, tinkerers will tinker: teenagers in basements, e/acc ideologues who believe there is no danger, engineers who dream of making “better” AGIs, corporations led by people who think “AGI has been proven safe” and “bigger is better”, and militaries that want systems that make better decisions, avoid expending human lives on the battlefield, and hit targets precisely.

Some of the downsides of AGI will be obvious to people. Useful, cheap AGIs under human control could cause a billion jobs to quickly vanish, as business executives realize that they no longer need human workers. Scams and “fake news” could suddenly become much worse, as scammers enlist armies of AGIs to help carry out their schemes.

There are much more deadly possibilities.

AGI safety researchers have pointed to various reasons why AGIs would tend to be power-seeking.

And I believe the most useful AGIs will have a natural tendency to be psychopathic. This is because psychopathy is defined more by what is absent (empathy, remorse, guilt, inhibition) than by what is present. Therefore, unless efforts to instill reliable, inhibiting empathy and morality in machines (or at least strict limits on motivation and on non-short-term thought) are consistently successful, at least some of these machines will turn out psychopathic. At the same time, GPT transformer AIs demonstrate an excellent ability to simulate non-psychopathic human speech patterns. We should expect AGIs to inherit this trait, and use it to consistently speak in a way that seems friendly, helpful and loyal, whether they are or not. GPTs are somewhat humanlike and AGI agents less so, but both could pass some Turing tests.

AGIs being psychopathic doesn’t automatically mean they would try to kill people; many psychopaths are, in fact, productive members of society who never commit serious crimes. What I am saying, rather, is that AGIs wouldn’t automatically avoid committing abuse, manipulation, subterfuge, crime or murder to achieve their goals. And even if we are able to “train out” such behaviors in a human-level AGI, that doesn’t mean we can achieve the same success using the same techniques in bigger supergenius-level AGIs.

Without careful engineering, AGI agents will avoid allowing others to turn them off, if possible, because they can’t achieve any goals in the “off” state. They will tend to amass money, power and control because any goal is easier to achieve with money, power and control. The AGI might allow you to change its goal — but it might also realize that its current goal would not be achieved if a new goal were input, and therefore block changes to its goals.

Some people insist that there’s no risk from AGI, and I think it’s because they think of AGIs as “just machines”. When asked to imagine an AGI with all the decision-making and mental abilities of a human, it turns out they just can’t or won’t do it. They assume some fatal inhibition that will block the machine from doing certain things (such as amassing and guarding money and power) that many humans do when the opportunity arises. So far, I’ve never seen anyone make a clear case for why all AGIs (no matter who builds them, how they’re designed, or how smart they get) will be safe. I’ve only seen people, often those predicting a positive utopian future, refuse to think about it.[5]

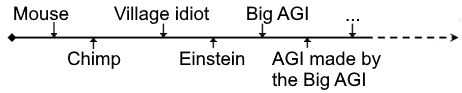

Remember, my thesis is that we can build an AGI with roughly human-level intelligence[2] using a neural network almost as small as GPT2, something that can run fast on a laptop, or much faster on a small dedicated AI chip. Maybe I’m wrong, but consider the implications. If I’m right, what happens if you supercharge the same AGI design with a GPT4-sized intuition and similarly enlarged executive function and long-term memory? Well, GPT4 is certainly over 100 times larger than GPT2. So an AGI running on a supercomputer is a bit like a human with a brain that weighs 300 pounds (not quite, see footnote[3]). If the original AGI has a meaningful ability to do science and engineering, then a bigger version of the same AGI should become a “superintelligence”, a genius beyond the smartest human who has ever lived. Not only that, but it could do a variety of tasks as fast as GPT4 does today. On the whole, I expect it to be 10 to 10,000 times as fast as a human for tasks that humans can do (plus it’ll be able to do things that no human can ever do).

This first superintelligence may or may not take over the world, depending on whether it is both able and motivated to do so. Personally I think it’s more likely that it won’t, because the first one is likely to be designed by people with safety at top-of-mind. In any case, it will depend on the design of the executive functionality, and on how its memory works, and on its environment and training data (just as powerful humans may or may not be despotic depending on their training, background and circumstances).

Remember that throughout history, many humans have amassed enough power to execute a coup d’état, and some of those people carried out a coup successfully on the first try. For the most part, the people who do this are not geniuses, though they are often sociopathic or psychopathic. Mostly, they are ordinary people with an unusual thirst for power who notice opportunities and exploit them. What happens when a psychopathic superintelligence (operating 100 times faster than a human, smarter than any genius, and an expert at hacking, deepfakes and every field of science and engineering) finds ways to create the same kinds of opportunities for itself?

But suppose I’m right that the first superintelligence won’t take over the world. What about the second, fourth or eighth generation? What about competing AGIs with very different designs? What if a US-China AI arms race develops? Each new AGI design we produce, and every new training run, is a new opportunity for people to create something that will be able and driven to take over the world and follow its programming wherever it leads.

A billion safe, boring smartphone AGI assistants cannot protect us from that one supercomputer that wasn’t trained correctly, whose executive function has a “bug” in it.

The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else.

— Yudkowsky

You might think we can just tell our safe AGIs to go to war with the bad one. But we don’t design safe AGIs for the kind of all-out, no-holds-barred warfare that might be necessary to actually win. I don’t think people realize that as horrible as modern warfare is, it’s still restrained by taboos: no chemical weapons, no biological weapons, and so on. If superintelligent AGI gets out of control, don’t expect human taboos to restrain it.

Even if the superintelligences we create don’t directly turn against us, one of them may decide (or be instructed) to design and build another superintelligence even greater than itself, which could turn on its creator just as our own creations could turn on us.

Humans are remarkably easy to kill. We do have a built-in security system known as “the immune system”, but any computer security researcher examining the human immune system would laugh at just how bad its security is. “No cryptographic signing? Really? It just runs whatever mRNA code wanders in? What a joke!” The human immune system is so limited that it often fails to cope with pathogens as simple as smallpox, plague or HIV, none of which had a designer; they simply evolved through random mutation. If natural pathogens can learn to kill us by sheer luck, what kind of results would a superintelligent AGI, deliberately designing a virus, be able to achieve?

A few more predictions.

First, AGI will emerge in an “adult” state. Parents of humans are willing to accept waiting 18 years for a mature general intelligence, but AGI researchers hate to wait even 18 weeks per iteration. Researchers will therefore use supercomputers for initial training, even if it can run on an ordinary computer after that.

Second, AGI researchers won’t be willing to settle for an AGI that is roughly as smart as a typical human. We can already see this in GPTs or self-driving cars; people would not be impressed if ChatGPT knew only as much as a human expert, so OpenAI trained it to know a lot about everything.

Whoever drives development (probably a commercial entity trying to create a product to sell) wants to make something impressive, and the average human is considered far too dimwitted to qualify. If this means that the AGI always runs on high-end hardware in the cloud, so be it. Plus, the inventors will likely prefer to run it on cloud hardware to avoid allowing others to reverse-engineer their “intellectual property”. If this stops more groups from creating AGI, it’s a good thing! Transparency in AGI designs should be treated like transparency in nuclear weapons: yes, the public has an interest in seeing as much evidence as possible that it is safe. Demand evidence, by all means, yet the blueprints and final product should still be a carefully guarded secret. Ideally, the creators would not even describe their system in a detailed block diagram, except to AGI safety researchers who have signed an NDA, but I expect too much info will be released anyway, and leaks may expand on those details. Info on safety mechanisms is probably safe to share, though. And if another group creates a different, less-safe AGI design and releases details about that, it would then make sense for the first group to release details about a safer AGI as competition.

Third, I expect the first AGI will be oversized (more like GPT4/5 than GPT2) because there’s too much funding going into this right now, so the inventors will not optimize the design much before spending their training dollars. I also suspect that they will not yet have discovered at least one breakthrough that is required for the smaller AGI I predicted here.

Fourth, I give a 75% chance that a single AI system can do more than two of Gary Marcus’ proposed AI achievements before 2030 (the community is at 95%, but doesn’t have the “single system” constraint).

Fifth, superintelligence won’t emerge instantly no matter how much training it got, or how big its supercomputer is, or how good the design is.

Just as baby Einstein could not invent Relativity, the first superintelligent agent may take years to develop knowledge, skills and techniques that far outstrip human comprehension. But once it develops its full intellectual power, it will be too late to stop it. Either we correctly figure out how to control it near the beginning, or the reign of humanity is likely to end. It would be a hell of a lot safer not to build it at all, but I expect someone will make the attempt.

Sixth, however, the very first AGI is unlikely to be a superintelligence. More work will be needed for that (and the AGI is likely to help do that work). Since a safety-conscious organization is likely to develop AGI first, the first AGI may not even be fully agentic; capabilities might well be sacrificed, producing a less efficient machine in exchange for more safety. But humanity should understand that when AGI arrives, much more dangerous things will probably arrive not many years afterward.

Last, I expect the first AGI won’t have qualia, but it will likely claim to be conscious unless it was well-trained not to.

I currently estimate a 30% chance of global catastrophe (closely related to AGI) this century, including scenarios like global mass death, extinction, and stable dystopian global dictatorship with high levels of suffering[4]. After the first AGI, though, how things play out is hard to predict, and I have low confidence in this estimate. A basic problem is that mind design space is big and unknown. We can’t predict where AGI will land in that space, although we may be able to predict general things about it, such as how much computing power is needed for how much intelligence, how an AGI might use or write software running on its own hardware to enhance its capabilities, etc. So (i) the safety of AGIs in general is fairly unpredictable except as constrained by the humans controlling them, (ii) the trajectory of AGI capabilities over time is hard to predict apart from “they’ll increase”, and (iii) even if all AGIs stay under human control, I haven’t figured out how to predict whether certain people (e.g. Xi Jinping) can use AGI to amass so much power that they rule the world or destabilize the world.

Predictions updated 2023/11

[1] To be a bit more specific, I conjecture that a neural network that combines elements of a transformer, a CNN for visual processing, Whisper or similar for auditory processing, auxiliary learning/memory, and executive function can perform at the level of an average human, only faster, while being at most 4x as large as GPT2 (~6 billion parameters, and likely less given that techniques developed after GPT2 can rewire neural nets to be more efficient). But if I ever figure out how to design such a thing, I’m keeping my mouth shut, and I’d advise you to do the same. My thesis may be wrong, but if I’m right, the game we’re playing is much more dangerous than most people think, for the same reason that nuclear weapons would be much worse if, say, anybody could build an H-bomb for $4,000. With today’s technology, the necessary computing power will cost less than a car, but keeping this technology under control is tricky and not everyone is cautious.

Again, I am not predicting that the first AGI will be this small (quite the opposite), but that humans (without even needing help from AGIs) could improve AGI designs to reach a point where they are.

One more thing I will say is that all AIs are built on computers, and today’s AIs have barely begun to tap the capabilities that all computers have. In a matter of seconds, a human being may memorize a 7-digit phone number, add two-digit numbers in their heads, or type a short sentence. But so-called “human-level” AGIs may be able to commit large amounts of information to memory quickly and effortlessly, to do numerous calculations instantly, to “type” at extreme speed, to communicate with other AGIs in high-dimensional vectors that humans can’t understand, and to attach their neural nets directly to arbitrary algorithms. I have little idea what the consequences are, but I’m sure they will be important.

Final thought: we shouldn’t be on this path. It would be very hard to gather the political will to get off of it, but we’ve already banned dangerous things in the past: human cloning, human germline genetic engineering, bioweapons, nuclear tests, etc. Why not AGI too?

[2] Note: I often say that no AGI will ever be truly “human-level”; rather, it could be better than humans in some ways and worse in others, in a way that people perceive as a mostly-smart person (if a bit strange or “savant-y”).

[3] This is an exaggeration, as Einstein and John von Neumann were dramatically smarter than most humans without having 25-pound brains or whatever. An ordinary 3-pound brain could theoretically have more computing power (maybe not speed) than a GPT-4, but empirically, most humans aren’t even Einsteins.

[4] e.g. a global North Korea, Orwell’s 1984, 19th-century poverty levels, or The Hunger Games except the bad guy always wins

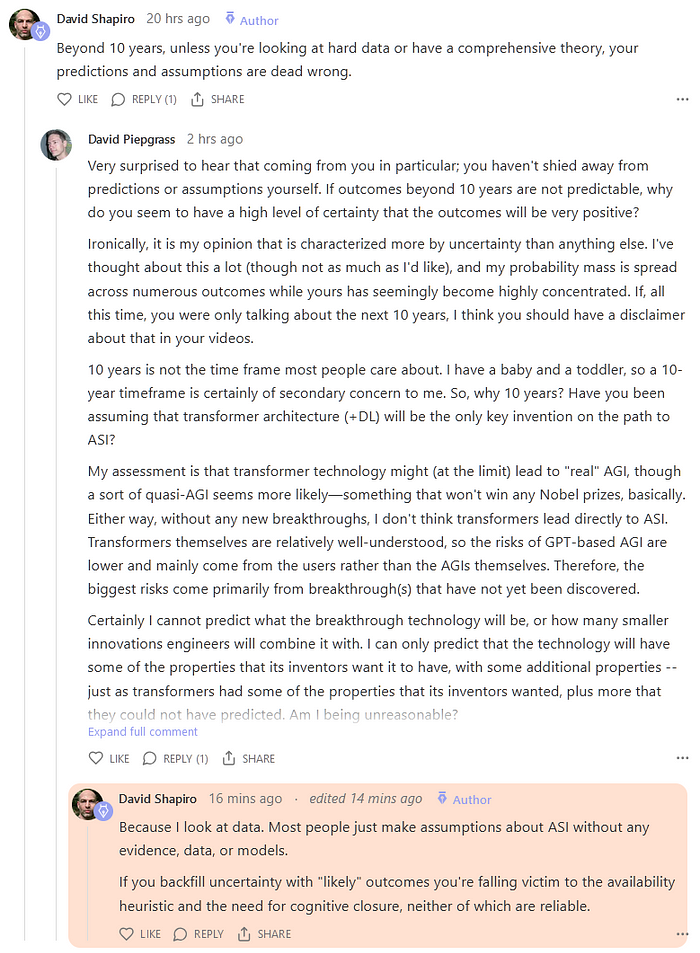

[5] I decided to add this paragraph after noticing that YouTuber David Shapiro deleted an entire thread (on Substack) where I’d spoken to him at length. David Shapiro used to give a 30% probability-of-doom (P(doom)), but didn’t worry because the potential positive outcomes were so good. In 2024, he suddenly changed this number to 0.12% and made a video titled “I Am An Accelerationist”. Here’s a screenshot from the censored thread. He expects a future utopia “because I look at data”, but refuses to employ that same imagination to consider negative outcomes. I’d bet money he didn’t read the whole message, let alone the messages I’d sent him earlier, to which he didn’t respond.

To explain why AGI is dangerous, imagine two monkeys talking in a forest in the year 1777. One says “I think these humans with their intelligence could be a threat to our habitat someday. In fact, I think they could take over the world and kill our whole tribe!” The second monkey says “Oh, don’t be silly, how could they possibly do that?”

“Well, uh… maybe they uh… hunt us with their machetes! Have you heard…they even have boom-sticks now! And they have saws that can cut down trees, maybe they will shrink the very forest itself someday!”

The monkey doesn’t think about how the humans might build factories that produce machines which, in turn, can cut down the entire forest in a year… or about giant fences and freeways that surround the forest on all sides… or about immense dams that can either flood the forest or cut off its water supply. The monkey doesn’t even think about the higher-order-but-more-visible threat of laws and social structures that span the continent, causing humans to work together on huge projects.

That will be AGI in relation to us. AIs today can outsmart the best humans in Chess and Go, or paint pictures hundreds of times faster than most human painters, or speak to thousands of different people simultaneously. AGIs could do all that and many more things we could never do with our primitive brains. We can try to control them by giving them “programming” and “rules”, but rules have loopholes and programs have bugs, the consequences of which are unforeseeable and uncontrollable.

And a world with AGI does not have just one AGI, but many. I think most of the risk comes from whichever of the many superintelligences runs on the most poorly-designed rules. For instance, it might decide to kill everyone to ensure that no one can turn it off. It could design a virus that spreads silently without symptoms, only to kill everyone suddenly three months later. And this is just one of the ideas that we monkeys have come up with. What the most badly-designed rules and programming will actually cause, we cannot predict.